Building the First Generative UI API: Technical Architecture and Design Decisions Behind C1

In a previous blog post, we introduced Generative UI and explained why it represents a monumental shift in human-computer interaction. Generative UI gives us an opportunity to redefine what interfaces mean at a fundamental level, and how they should be designed and implemented. Advances in Artificial Intelligence (AI) now let us harness the generative capabilities of Large Language Models (LLMs) to produce personalized, intelligent, and curated user interfaces at runtime.

In this technical deep-dive, we cover how we at Thesys are making Generative UI more accessible and practical across multiple domains by building the C1 API, the first production-ready Generative UI API.

Core Design Principles for Production Generative UI

As we analyzed what modern LLMs could do for Generative UI applications, we realized that using them to generate interfaces dynamically wouldn't be straightforward. To set a clear bar for user and developer experience, we defined four non-negotiable requirements before building the C1 Generative UI API:

Speed of Inference

Generative UI must match the speed users expect from text generation: when interfaces are generated dynamically, users expect chatbot-like responsiveness. The C1 API middleware therefore needed to be lightweight, fast, and token-efficient so that Generative UI feels as natural as conversational AI.

Interactivity

Every generated interface must be fully interactive. We treat interactivity as a fundamental layer of Generative UI, ensuring interfaces never feel static. This principle distinguishes production Generative UI from simple layout generation - users expect dynamic components that respond to their actions.

Responsiveness

Generative UI components must adapt to any screen size. LLMs typically power full-screen chat experiences, but replacing text with UI elements requires explicit attention to responsive design. Generative UI interfaces must work seamlessly across desktop, tablet, and mobile viewports.

Cohesiveness

Generated interfaces must integrate with the host application's existing design system, so that every Generative UI component feels native. This keeps generated interfaces from looking disconnected from the surrounding elements, preserving visual consistency and user trust.

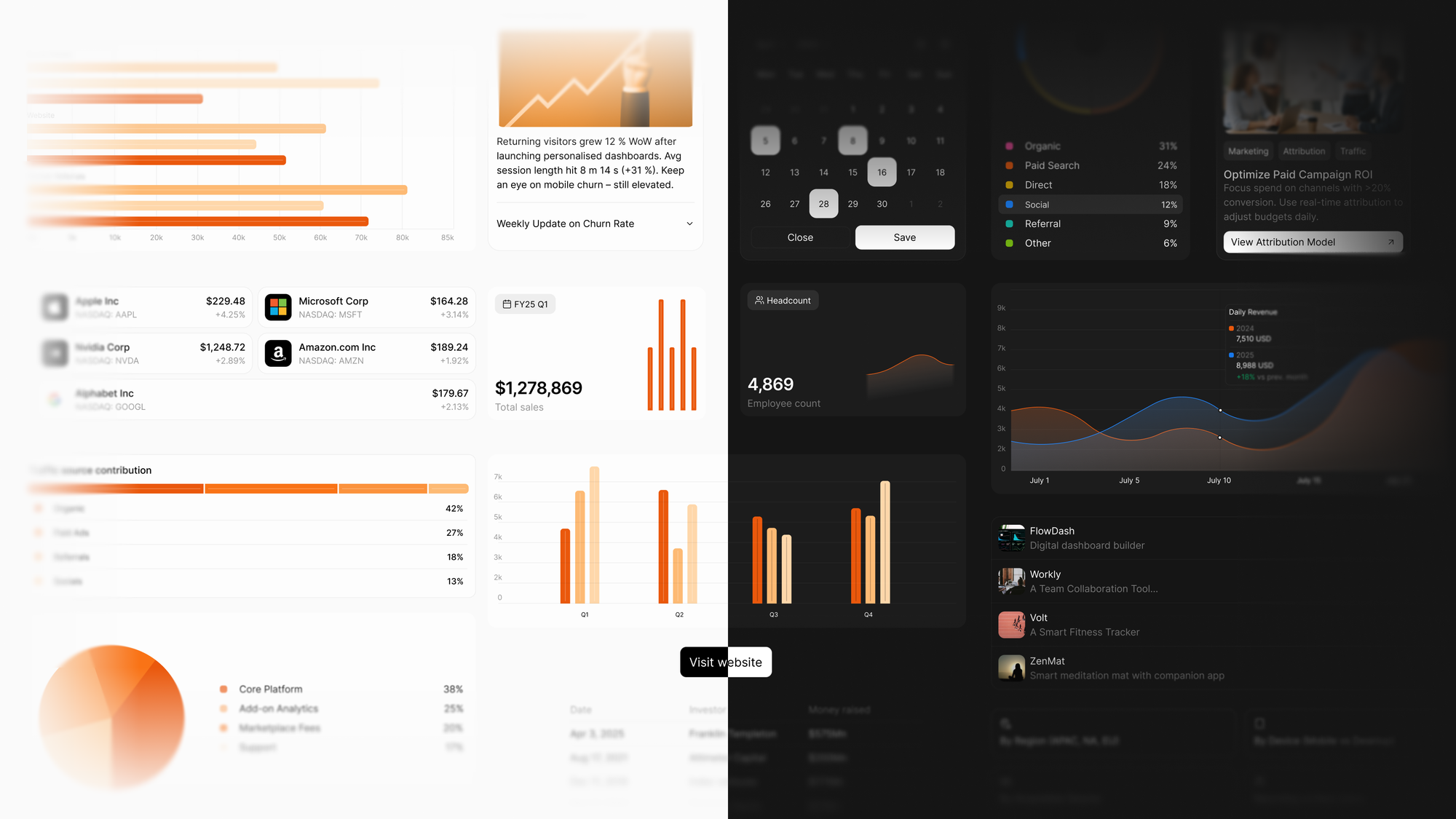

Crayon Design System

To implement these principles, we built Crayon, our open-source React design system, which serves as the atomic foundation for everything C1 generates. Crayon provides the standardized building blocks that the C1 API uses to construct dynamic interfaces.

Design System Architecture for Generative UI

Crayon is a holistic collection of fundamental, interactive, and responsive elements, each usable on its own and composable into larger Generative UI interfaces. These "atoms" of our design system enable the C1 API to generate interfaces that are standardized, accessible, and optimized for dynamic generation.

Each Crayon component is built with Radix primitives for accessibility and shadcn/ui patterns for developer familiarity, then enhanced specifically for Generative UI use cases. This approach ensures every generated interface component is:

- Accessible by default - WCAG compliance built-in

- Responsive across devices - Mobile-first design principles

- Interactive out of the box - Event handlers and state management included

- Consistent with design tokens - Unified theming and styling
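To make "consistent with design tokens" concrete, here is a minimal TypeScript sketch of one common token-based theming approach: a nested token object is flattened into CSS custom properties that every component reads. The token names, values, and the `--crayon-` prefix are hypothetical illustrations, not Crayon's actual theme API.

```typescript
// Hypothetical token tree; real Crayon token names and values may differ.
type TokenTree = { [key: string]: string | TokenTree };

const tokens: TokenTree = {
  color: { primary: "#4f46e5", surface: "#ffffff" },
  radius: { md: "8px" },
  spacing: { sm: "4px", md: "8px" },
};

// Flatten nested tokens into CSS custom properties, e.g.
// { color: { primary: "#4f46e5" } } -> { "--crayon-color-primary": "#4f46e5" }.
// The "--crayon" prefix is an assumption made for this example.
function toCssVariables(tree: TokenTree, prefix = "--crayon"): Record<string, string> {
  const vars: Record<string, string> = {};
  for (const [key, value] of Object.entries(tree)) {
    const varName = `${prefix}-${key}`;
    if (typeof value === "string") {
      vars[varName] = value;
    } else {
      // Recurse into nested groups, extending the variable name.
      Object.assign(vars, toCssVariables(value, varName));
    }
  }
  return vars;
}

// Serialize the variables into a :root rule that a host app could inject.
function toRootRule(vars: Record<string, string>): string {
  const body = Object.entries(vars)
    .map(([varName, value]) => `  ${varName}: ${value};`)
    .join("\n");
  return `:root {\n${body}\n}`;
}

const cssVars = toCssVariables(tokens);
console.log(toRootRule(cssVars));
```

In this sketch, components would reference values such as `var(--crayon-color-primary)` instead of hard-coded colors, so a host application can restyle every generated interface by overriding the tokens once.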

Why Build a Custom Design System for Generative UI?

Rather than adapting existing component libraries, we built Crayon to ensure the highest level of control, quality, and generation speed for Generative UI applications. The C1 API has built-in knowledge of every Crayon component and uses LLMs to select and configure the appropriate components for each user intent.
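One way to picture an API that knows a fixed component catalog is a registry that model output is checked against before anything renders. The sketch below is an assumption about how such a check could work, not C1's internal implementation; the component names (`Card`, `Table`, `Button`) and their required props are hypothetical.

```typescript
// A generated UI node: a component name, its props, and optional children.
interface UINode {
  component: string;
  props: Record<string, unknown>;
  children?: UINode[];
}

// Hypothetical catalog: each allowed component and the props it requires.
const registry: Record<string, { required: string[] }> = {
  Card: { required: ["title"] },
  Table: { required: ["columns", "rows"] },
  Button: { required: ["label"] },
};

// Walk the tree and collect every problem instead of stopping at the first.
function validate(node: UINode, path = "root"): string[] {
  const errors: string[] = [];
  const spec = Object.prototype.hasOwnProperty.call(registry, node.component)
    ? registry[node.component]
    : undefined;
  if (!spec) {
    errors.push(`${path}: unknown component "${node.component}"`);
  } else {
    for (const prop of spec.required) {
      if (!(prop in node.props)) {
        errors.push(`${path}: ${node.component} is missing "${prop}"`);
      }
    }
  }
  (node.children ?? []).forEach((child, i) => {
    errors.push(...validate(child, `${path}.children[${i}]`));
  });
  return errors;
}

const generated: UINode = {
  component: "Card",
  props: { title: "Q3 revenue" },
  children: [
    { component: "Table", props: { columns: ["Region", "Revenue"], rows: [] } },
    { component: "Slider", props: {} }, // not in the catalog
  ],
};

// returns ['root.children[1]: unknown component "Slider"']
console.log(validate(generated));
```

Collecting all errors, rather than throwing on the first, leaves the caller free to drop invalid nodes or return the errors to the model for a retry.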

This tight integration between our Generative UI API and design system ensures that C1 provides a complete, production-ready Generative UI experience aligned with our four core principles.

C1 API

With our principles and design system established, we developed the C1 API, the simplest way to add Generative UI to any application programmatically. The API converts LLM responses into structured UI representations that render as interactive Crayon components at runtime.

OpenAI Compatibility for Generative UI

We chose to make C1 fully compatible with the OpenAI API to ease developer onboarding and standardize integration patterns. Regardless of which C1 model a developer selects, the request format, response structure, and error handling always follow the OpenAI API specification.

This compatibility means developers can integrate Generative UI capabilities into existing applications with minimal code changes, treating C1 as an enhanced OpenAI endpoint that returns structured interfaces instead of plain text.
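Because C1 follows the OpenAI API specification, a request is an ordinary Chat Completions call with a different base URL, API key, and model name. The TypeScript sketch below only builds the request. The base URL and model identifier are placeholders, and the `/chat/completions` path is assumed to mirror OpenAI's, so check the Thesys documentation for the real values before sending the request with `fetch(url, init)` or pointing the official OpenAI SDK at C1 via its `baseURL` option.

```typescript
// Message shape from the OpenAI Chat Completions specification.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Minimal request description; pass url and init to fetch(url, init).
interface HttpRequest {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
}

// Placeholders (assumptions): substitute the base URL and model from the C1 docs.
const C1_BASE_URL = "https://<c1-base-url>/v1";
const C1_MODEL = "<c1-model-id>";

function buildC1Request(apiKey: string, messages: ChatMessage[]): HttpRequest {
  return {
    // Assumed to mirror OpenAI's path; only the host, key, and model change.
    url: `${C1_BASE_URL}/chat/completions`,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${apiKey}`,
      },
      // stream: true asks for incremental chunks, as in the OpenAI API.
      body: JSON.stringify({ model: C1_MODEL, messages, stream: true }),
    },
  };
}

const req = buildC1Request("test-key", [
  { role: "user", content: "Compare our three pricing plans" },
]);
console.log(req.url);
```

Since only the endpoint details differ, code that already builds OpenAI requests needs little more than a configuration change.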

System Prompts and Tool Integrations

The C1 API supports system prompts and tool calling to give developers precise control over Generative UI behavior. System prompts enable teams to define consistent interface patterns, enforce design guidelines, and customize generation behavior for specific use cases.

Tool integration allows Generative UI components to connect with external APIs, databases, and services, enabling dynamic interfaces that pull real-time data and perform actions based on user interactions.
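Since tool calling also follows the OpenAI specification, a tool is declared as a JSON Schema function in the `tools` array, and your own code executes the `tool_calls` the model returns. The sketch below pairs a system prompt with one tool and dispatches a tool call; the `get_sales` tool, its data, the prompt text, and the model placeholder are hypothetical.

```typescript
// Tool declaration in the OpenAI function-calling format.
const tools = [
  {
    type: "function",
    function: {
      name: "get_sales",
      description: "Return monthly sales totals for a region",
      parameters: {
        type: "object",
        properties: { region: { type: "string" } },
        required: ["region"],
      },
    },
  },
];

// Hypothetical system prompt enforcing interface guidelines.
const systemPrompt = "Present numeric comparisons as charts. Keep layouts to a single column.";

const requestBody = {
  model: "<c1-model-id>", // placeholder
  messages: [
    { role: "system", content: systemPrompt },
    { role: "user", content: "How are EU sales trending?" },
  ],
  tools,
};

// Tool call shape (OpenAI spec): arguments arrive as a JSON-encoded string.
interface ToolCall {
  id: string;
  type: "function";
  function: { name: string; arguments: string };
}

type Handler = (args: Record<string, unknown>) => unknown;

// Hypothetical local implementations keyed by tool name.
const handlers = new Map<string, Handler>([
  ["get_sales", (args) => (args.region === "EU" ? [120, 135, 150] : [])],
]);

// Execute each call and build the "tool" messages to send back to the model.
function runToolCalls(calls: ToolCall[]) {
  return calls.map((call) => {
    const handler = handlers.get(call.function.name);
    const result = handler
      ? handler(JSON.parse(call.function.arguments))
      : { error: `unknown tool ${call.function.name}` };
    return { role: "tool", tool_call_id: call.id, content: JSON.stringify(result) };
  });
}

const replies = runToolCalls([
  { id: "call_1", type: "function", function: { name: "get_sales", arguments: '{"region":"EU"}' } },
]);
console.log(replies[0].content); // [120,135,150]
```

Appending these `tool` messages to the conversation and sending it again lets the model build the interface from the returned data.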

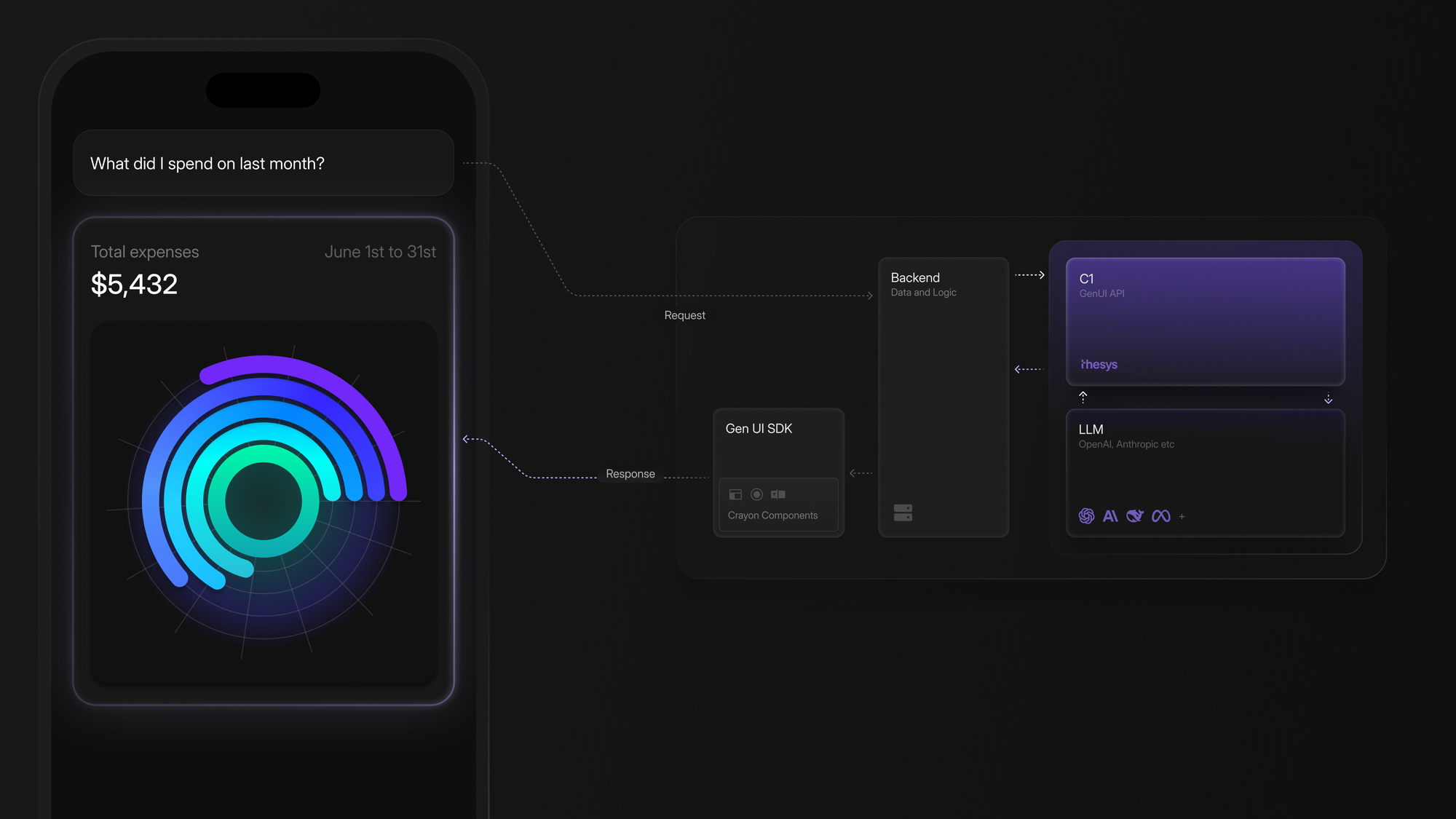

React SDK

The C1 GenUI SDK bridges the gap between API responses and rendered interfaces, translating C1 API outputs into live React components. The SDK handles the complex orchestration of streaming responses, component instantiation, and state management required for production Generative UI.
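The GenUI SDK itself is a React library. The dependency-free TypeScript sketch below only illustrates the core idea of translating a structured response into UI: each node's component name is looked up in a registry of render functions. HTML strings stand in for React elements, and the node shape and component names are hypothetical, not the actual C1 response format.

```typescript
interface UINode {
  component: string;
  props: Record<string, unknown>;
  children?: UINode[];
}

// A renderer receives the node's props and its already-rendered children.
type Renderer = (props: Record<string, unknown>, children: string[]) => string;

// Minimal HTML escaping so generated text cannot inject markup.
const escapeHtml = (value: unknown): string =>
  String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");

// Hypothetical registry; in React these entries would be components.
const renderers = new Map<string, Renderer>([
  ["Card", (p, c) => `<section><h2>${escapeHtml(p.title)}</h2>${c.join("")}</section>`],
  ["Text", (p) => `<p>${escapeHtml(p.text)}</p>`],
  ["Button", (p) => `<button>${escapeHtml(p.label)}</button>`],
]);

// Depth-first render: children first, then the parent wraps them.
function render(node: UINode): string {
  const children = (node.children ?? []).map(render);
  const renderer = renderers.get(node.component);
  // Unknown components degrade to a placeholder instead of crashing.
  return renderer
    ? renderer(node.props, children)
    : `<div data-unknown="${escapeHtml(node.component)}"></div>`;
}

const html = render({
  component: "Card",
  props: { title: "Plans" },
  children: [
    { component: "Text", props: { text: "Pro includes <SSO>" } },
    { component: "Button", props: { label: "Upgrade" } },
  ],
});
console.log(html);
```

A registry lookup keeps the renderer closed over a known set of components, which is what lets generated output stay within the design system.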

Partial Rendering for Real-Time Generative UI

The SDK supports partial rendering, allowing users to see Generative UI interfaces building progressively as the C1 API streams responses. This capability is crucial for meeting our inference speed requirements and provides appropriate visual feedback during generation.
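A simple way to picture partial rendering: as text chunks stream in, keep a buffer, parse only the parts that are complete, and re-render with everything parsed so far. The sketch below assumes a hypothetical newline-delimited JSON stream with one component per line; C1's real streaming format may differ, and the SDK handles it for you.

```typescript
interface UINode {
  component: string;
  props: Record<string, unknown>;
}

// Accumulates streamed text and exposes every component completed so far.
class ProgressiveParser {
  private buffer = "";
  readonly nodes: UINode[] = [];

  // Feed one network chunk; returns true if new components became available.
  push(chunk: string): boolean {
    this.buffer += chunk;
    const lines = this.buffer.split("\n");
    // The last piece may be an incomplete line, so keep it for the next chunk.
    this.buffer = lines.pop() ?? "";
    const before = this.nodes.length;
    for (const line of lines) {
      if (line.trim() !== "") this.nodes.push(JSON.parse(line) as UINode);
    }
    return this.nodes.length > before;
  }
}

const parser = new ProgressiveParser();
const chunks = [
  '{"component":"Card","props":{"title":"Plans"}}\n{"compon',
  'ent":"Button","props":{"label":"Upgrade"}}\n',
];
for (const chunk of chunks) {
  if (parser.push(chunk)) {
    // In a UI, this is where the tree would re-render with parser.nodes.
    console.log(parser.nodes.map((n) => n.component).join(", "));
  }
}
// Card
// Card, Button
```

The `Card` becomes visible after the first chunk even though the `Button` definition is still split across the network boundary.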

Since Crayon is built in React, the GenUI SDK produces standard React elements. The key difference is that these components are generated dynamically at runtime to fit the user's intent, rather than defined statically.

Stitching It All Together: The Complete Generative UI Pipeline

Understanding how Crayon, the C1 API, and the GenUI SDK work together is key to implementing production Generative UI. Let's walk through the complete pipeline from user intent to rendered interface:

The Generative UI Request Flow

- User Intent Capture: A user query or interaction triggers a request to your application

- C1 API Processing: Your application sends the intent to the C1 API's OpenAI-compatible endpoint

- LLM Analysis: C1's underlying LLM analyzes the intent and determines appropriate UI components

- Structured Response: Instead of text, C1 returns a structured representation of Crayon components

- SDK Rendering: GenUI SDK interprets the response and renders live React components

- Progressive Display: Components stream and render progressively as the response arrives
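The steps above can be condensed into a dependency-free sketch in which the model call is injected as a function, so the flow runs without network access. Everything here is illustrative: `fakeModel` is a hypothetical stand-in for the C1 endpoint, and the JSON component tree it returns is not C1's actual response format.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface UINode {
  component: string;
  props: Record<string, unknown>;
  children?: UINode[];
}

// Steps 2-3 are abstracted behind this signature: messages in, completion text out.
type ModelCall = (messages: ChatMessage[]) => string;

// Hypothetical stand-in for the C1 endpoint: answers with a card holding a table.
const fakeModel: ModelCall = (messages) =>
  JSON.stringify({
    component: "Card",
    props: { title: messages[messages.length - 1].content },
    children: [{ component: "Table", props: { columns: ["Month", "Sales"], rows: [] } }],
  });

// Stand-in for rendering: list component names depth-first.
const outline = (node: UINode): string[] => [
  node.component,
  ...(node.children ?? []).flatMap(outline),
];

function handleIntent(userText: string, callModel: ModelCall): string[] {
  // 1. Capture the user's intent as a chat message.
  const messages: ChatMessage[] = [{ role: "user", content: userText }];
  // 2-3. Send it to the model, which chooses components.
  const raw = callModel(messages);
  // 4. Parse the structured response instead of treating it as prose.
  const tree = JSON.parse(raw) as UINode;
  // 5-6. Hand the tree to the renderer (here, an outline of it).
  return outline(tree);
}

// returns ["Card", "Table"]
console.log(handleIntent("Show Q3 sales", fakeModel));
```

Injecting the model call also makes the pipeline easy to unit-test with canned responses.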

Component Selection and Configuration

The C1 API doesn't pick Crayon components at random; it matches user intent to appropriate interface patterns:

- Data queries → Tables, charts, and visualization components

- Form requirements → Input fields, selectors, and validation

- Action requests → Buttons, modals, and interactive elements

- Information display → Cards, lists, and structured layouts

Each component comes pre-configured with appropriate props, styling, and event handlers based on the specific context.
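In C1, the LLM itself decides which components fit a request. The mapping in the list above can still be written down as a typed lookup table, for example to render guidance text for a system prompt. The intent labels, component names, and the prompt-guidance use are hypothetical.

```typescript
// Intent categories from the list above (labels are hypothetical).
type IntentKind = "data" | "form" | "action" | "info";

// Component families per intent; names are illustrative, not Crayon's API.
// Typing the record by IntentKind makes the compiler demand an entry per category.
const componentsByIntent: Record<IntentKind, readonly string[]> = {
  data: ["Table", "BarChart", "LineChart"],
  form: ["TextInput", "Select", "Checkbox"],
  action: ["Button", "Modal"],
  info: ["Card", "List"],
};

// Render the table as prompt text, one possible (assumed) use of the mapping.
function toPromptGuidance(map: Record<IntentKind, readonly string[]>): string {
  return (Object.keys(map) as IntentKind[])
    .map((kind) => `- ${kind} requests: prefer ${map[kind].join(", ")}`)
    .join("\n");
}

console.log(toPromptGuidance(componentsByIntent));
```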

Real-Time State Management

The GenUI SDK handles complex state management scenarios that arise in Generative UI:

- Component lifecycle during streaming responses

- Event handling for user interactions with generated components

- State persistence across component updates and re-renders

- Error boundaries for graceful handling of generation failures
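To illustrate state persistence and error boundaries, the sketch below keeps user-entered state in a store keyed by a stable component id. When a streamed update replaces the tree, values for components that are still present survive and state for removed components is dropped; a try/catch wrapper plays the role of an error boundary. The `id` field, the store, and the fallback markup are assumptions for illustration; in the GenUI SDK these concerns are handled for you.

```typescript
interface UINode {
  id: string; // assumed to be stable across stream updates
  component: string;
  props: Record<string, unknown>;
}

type StateStore = Map<string, unknown>;

// Keep state only for ids that are still present in the newest tree.
function reconcileState(store: StateStore, nodes: UINode[]): StateStore {
  const live = new Set(nodes.map((n) => n.id));
  return new Map(Array.from(store).filter(([id]) => live.has(id)));
}

// Error-boundary-style wrapper: a failing renderer yields a fallback
// instead of taking down the whole generated interface.
function safeRender(node: UINode, renderFn: (n: UINode) => string): string {
  try {
    return renderFn(node);
  } catch {
    return `<div role="alert">Could not render ${node.component}</div>`;
  }
}

let store: StateStore = new Map<string, unknown>([
  ["email", "a@b.co"],
  ["plan", "pro"],
]);
// A streamed update keeps the email field but drops the plan selector.
store = reconcileState(store, [{ id: "email", component: "TextInput", props: {} }]);
console.log(Array.from(store.keys())); // ["email"]

const fallback = safeRender({ id: "chart", component: "Chart", props: {} }, () => {
  throw new Error("bad data");
});
console.log(fallback);
```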

Getting Started with Production Generative UI

For developers ready to implement Generative UI in their applications, we've created comprehensive resources and examples at https://demo.thesys.dev. Here's how to start building with the C1 Generative UI API:

Quick Setup for Generative UI Development

- Get the C1 Generative UI template

> git clone https://github.com/thesysdev/template-c1-component-next.git

- Install dependencies

> cd template-c1-component-next

> npm install

- Set your API key (get one at https://console.thesys.dev/keys)

> export THESYS_API_KEY=<your-api-key>

- Start building with Generative UI

> npm run dev

For comprehensive guidance on implementing custom elements, configuring system prompts, and enabling tool calls in your Generative UI applications, visit our documentation at https://docs.thesys.dev/guides/overview.